In bioinformatics, data mining and machine learning are powerful tools used to extract patterns, insights, and predictions from biological data. Here’s a breakdown:

Data Mining in Bioinformatics:

Data mining involves exploring large datasets to discover meaningful patterns, relationships, or structures within the data. In bioinformatics, this typically involves extracting knowledge from biological datasets, such as genomic sequences, protein structures, or drug interactions. Some common data mining techniques used in bioinformatics include:

- Clustering: Grouping similar biological entities together based on certain features, like clustering genes with similar expression patterns.

- Classification: Assigning biological entities to predefined categories based on characteristics, such as classifying proteins into functional families.

- Association Rule Mining: Identifying relationships or associations between biological variables, like finding frequent patterns of co-occurring genetic variations.

- Feature Selection: Identifying the most relevant features or variables within a dataset, crucial for building accurate predictive models.

Machine Learning in Bioinformatics:

Machine learning involves developing algorithms that enable computers to learn from data and make predictions or decisions without explicit programming. In bioinformatics, machine learning techniques are applied to analyze biological data and make predictions. Some common machine learning applications in bioinformatics include:

- Genomic Sequence Analysis: Predicting gene function, identifying regulatory elements, or classifying sequences into functional categories.

- Protein Structure Prediction: Predicting the three-dimensional structure of proteins or their interactions with other molecules.

- Drug Discovery: Predicting drug-target interactions, designing new compounds, or identifying potential drug candidates.

- Disease Diagnosis and Prognosis: Developing models for disease diagnosis, prognosis, or identifying biomarkers associated with specific conditions.

- Personalized Medicine: Tailoring treatments or therapies based on individual genetic profiles, leveraging machine learning to analyze patient data.

Challenges and Advancements:

- Data Quality and Quantity: Bioinformatics deals with large and diverse datasets, often with noise and missing values, requiring careful preprocessing.

- Interpretability: Understanding the biological significance of machine learning predictions or patterns is crucial for practical applications.

- Ethical Considerations: Ensuring responsible use of machine learning in bioinformatics, especially concerning sensitive biological information.

Advancements in machine learning algorithms, coupled with the availability of vast biological datasets, continue to revolutionize bioinformatics, aiding in understanding complex biological systems, drug development, personalized medicine, and more.

Data Mining and Machine Learning in Bioinformatics

Application of machine learning in Bioinformatics

Machine learning techniques are widely applied in bioinformatics for various purposes, leveraging methods like classification, clustering, and more. Here’s how they’re used:

1. Classification:

- Gene Function Prediction: Classifying genes based on sequences or expression patterns to infer their functions, essential for understanding biological processes.

- Disease Diagnosis: Building models to classify diseases or conditions based on genetic markers, biomarkers, or patient data, aiding in accurate diagnoses.

- Drug Target Prediction: Classifying molecules or proteins to predict potential drug targets, assisting in drug discovery and development.

2. Clustering:

- Gene Expression Analysis: Clustering genes or samples based on expression profiles to identify co-regulated genes or distinct biological conditions.

- Protein Families: Clustering proteins to group them into families based on sequence similarity, aiding in understanding their functions and evolutionary relationships.

3. Regression:

- Quantitative Trait Prediction: Predicting quantitative traits based on genetic variations or environmental factors, contributing to understanding complex traits.

4. Feature Selection:

- Identifying Biomarkers: Selecting the most relevant features (genes, proteins, etc.) associated with a disease or condition, crucial for biomarker discovery.

5. Neural Networks and Deep Learning:

- Protein Structure Prediction: Using deep learning models to predict protein structures from amino acid sequences, aiding in understanding protein function and drug design.

- Image Analysis: Analyzing biological images, such as microscopy images or medical scans, for cell classification, disease detection, etc.

6. Network Analysis:

- Biological Network Modeling: Analyzing and modeling biological networks, such as gene regulatory networks or protein-protein interaction networks, to understand system-level behaviors.

7. Text Mining and Natural Language Processing (NLP):

- Literature Mining: Extracting information from scientific publications or databases for knowledge discovery, identifying relationships between genes, diseases, or drugs.

Challenges and Advancements:

- Data Integration: Integrating multiple types of omics data for comprehensive analysis.

- Interpretability: Ensuring models are interpretable and understanding the biological relevance of predictions.

- Ethical Considerations: Addressing ethical issues related to privacy, consent, and responsible use of biological data in machine learning applications.

Overall, machine learning plays a pivotal role in bioinformatics by enabling data-driven discoveries, aiding in understanding biological systems, disease mechanisms, drug development, and personalized medicine.

>>>>>>>>>>See the Learn ML Course on this website<<<<<<<<<<

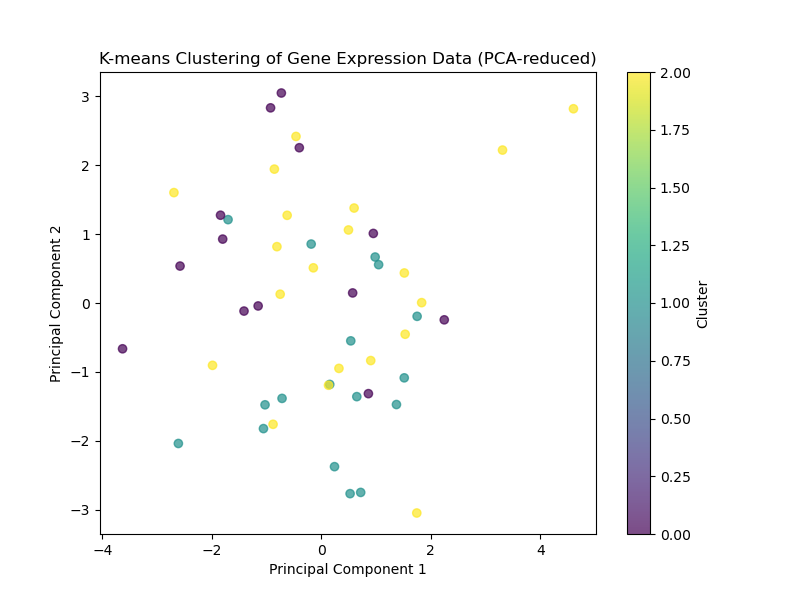

This is an example of clustering gene expression data in Python:

import pandas as pd

import numpy as np

from sklearn.cluster import KMeans

from sklearn.decomposition import PCA

import matplotlib.pyplot as plt

# Generating synthetic gene expression data (replace this with your actual data)

np.random.seed(42)

genes = 1000

samples = 50

expression_data = np.random.rand(genes, samples)

# Creating a DataFrame for the gene expression data

gene_names = [f'Gene_{i}' for i in range(genes)]

sample_names = [f'Sample_{i}' for i in range(samples)]

expression_df = pd.DataFrame(expression_data, index=gene_names, columns=sample_names)

# Performing PCA for dimensionality reduction

pca = PCA(n_components=2)

pca_result = pca.fit_transform(expression_df.transpose())

# Performing K-means clustering (assuming k=3 clusters)

k = 3

kmeans = KMeans(n_clusters=k, random_state=42)

cluster_assignments = kmeans.fit_predict(expression_df.transpose())

# Visualizing the clustering results in the reduced space

plt.figure(figsize=(8, 6))

plt.scatter(pca_result[:, 0], pca_result[:, 1], c=cluster_assignments, cmap='viridis', alpha=0.7)

plt.title('K-means Clustering of Gene Expression Data (PCA-reduced)')

plt.xlabel('Principal Component 1')

plt.ylabel('Principal Component 2')

plt.colorbar(label='Cluster')

plt.show()Which outputs:

The code generates synthetic gene expression data, creates a Pandas DataFrame with the expression values, applies K-means clustering assuming three clusters, and visualizes the clustering results using a scatter plot of the first two principal components.

For real gene expression data, typically data is loaded from a file or a database and then perform clustering using similar steps. Adjustments in parameters, such as the number of clusters (k in this case), may be required based on the characteristics of your data.

Preprocessing the data appropriately, handling missing values, normalizing, or scaling features as necessary is important before performing clustering analysis.

Feature selection and dimensionality reduction in biological data

In biological data analysis, feature selection and dimensionality reduction are crucial techniques to handle high-dimensional datasets and extract relevant information. Here’s an explanation of both:

Feature Selection:

Biological datasets often contain a vast number of features (genes, proteins, etc.), and not all features contribute equally to the analysis. Feature selection aims to identify the most informative and relevant features while excluding redundant or less important ones.

- Importance of Feature Selection:

- Enhanced Model Performance: By focusing on the most relevant features, models can be more accurate and efficient.

- Reduced Overfitting: Using fewer features can help prevent overfitting, where a model learns noise instead of true patterns.

- Interpretability: It aids in understanding the biological significance of the selected features.

- Techniques for Feature Selection:

- Filter Methods: These methods use statistical measures to rank features based on their relevance to the target variable. Examples include correlation analysis, chi-square tests, or information gain.

- Wrapper Methods: These methods involve training a model and selecting features based on their impact on the model’s performance (e.g., forward/backward selection, recursive feature elimination).

- Embedded Methods: These techniques perform feature selection as part of the model training process, like LASSO (Least Absolute Shrinkage and Selection Operator) regularization.

Dimensionality Reduction:

High-dimensional biological data, such as gene expression or protein profiles, can suffer from the curse of dimensionality, where the abundance of features can lead to increased computational complexity and overfitting. Dimensionality reduction techniques aim to project high-dimensional data into a lower-dimensional space while preserving important information.

- Purpose of Dimensionality Reduction:

- Computational Efficiency: Reducing the number of dimensions can make computations faster and more manageable.

- Visualization: Visualizing data in lower dimensions (such as 2D or 3D) facilitates better understanding and interpretation.

- Noise Reduction: It helps in filtering out irrelevant or noisy features, improving the quality of the analysis.

- Techniques for Dimensionality Reduction:

- Principal Component Analysis (PCA): It identifies orthogonal axes that capture the maximum variance in the data.

- t-Distributed Stochastic Neighbor Embedding (t-SNE): It’s useful for visualizing high-dimensional data in low-dimensional space while preserving local structures.

- Linear Discriminant Analysis (LDA): Particularly useful for supervised dimensionality reduction, aiming to maximize class separability.

Applications in Biological Data:

These techniques are applied extensively in bioinformatics for various tasks:

- Identifying genes or proteins associated with diseases or traits.

- Visualizing high-dimensional data for pattern recognition.

- Preprocessing data before building predictive models for drug discovery or personalized medicine.

Both feature selection and dimensionality reduction play pivotal roles in uncovering meaningful insights from complex biological datasets, aiding in better understanding biological mechanisms and making more accurate predictions or classifications.

There is an example for dimensionality reduction in the Learn ML Course on this site.